You’ve taken a quick look, and decided that Sybase ASE Cluster Edition is right for you. Managing the POC requires a great deal of forethought & planning. Jeffrey Garbus shares some best practices to get you started.

You’ve taken a quick look, and decided that Sybase ASE

Cluster Edition is right for you. Chances are you’ve based your decision on one

of a relatively tight list of reasons:

- High availability

- Work-load consolidation

- Resource utilization

ASE CE takes advantage of shared-disk architecture, and

OS-level clustering to provide high availability clustering. This means that if

you are pointing to a “cluster” containing many servers, if one goes down, this

is transparent to the end users because another server within the cluster can

take over the workload, accessing the failed server’s databases on the SAN.

Work-load distribution, via the workload manager, enables

you to balance processing across multiple servers. Again, through the cluster

entry point, transparent to the users, ASE routes the requests to the correct

server (or to a random server for balancing purposes), once again making a single

server failure a nonissue from the users’ perspective, as well as allowing less

expensive resources to be used in a variety of situations.

The work-load distribution has a side benefit. You can also

consolidate servers, running individual servers at a higher utilization rate,

meaning that you can have several servers running at 60%, knowing that you have

many servers to take on any peak performance, rather than having to run

multiple servers each with enough peak potential.

So, decision made, it’s time to start thinking about the

proof-of-concept (POC). As with many IT projects, your most critical success

component will be planning.

Scoping the POC

The point of your POC is to demonstrate that the ASE CE will

do what you intend it to do, in your environment.

More specifically, you need to identify a simple,

quantifiable set of requirements. These may have to do with any of the areas

mentioned above (high availability, workload management, connection migration),

or may include ease of use of installation, backup/recovery, or performance.

In any case, you want to identify specific tests, and

specific success criteria. Put together specific, detailed test cases,

including POC objectives, functional specifications, and specific tests, all

with success criteria in a good, checklist format.

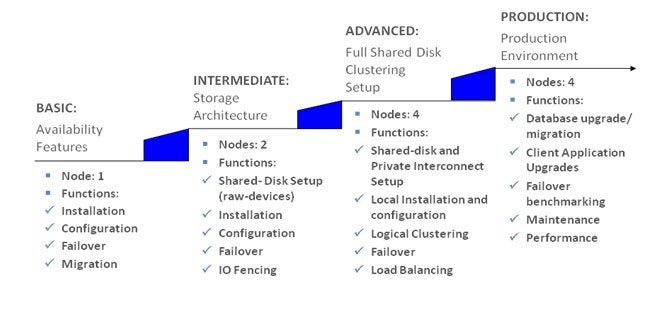

There are a variety of ways of defining the scope, but here

are a few basic levels:

Basic

You can check basic availability features. Use one node, and

test the entry-level functions:

- Installation

- Configuration

- Failover

- Migration

Intermediate

A step up from basic availability takes you a bit further.

With two nodes, you can test Storage architecture:

- Shared-disk setup (raw devices)

- Installation

- Configuration

- Failover

- IO fencing

Advanced

The next step up would likely test the full shared disk

cluster. Now, go up to 4 nodes, and work with:

- Shared-disk and private-interconnect setup

- Local installation and configuration

- Logical Clustering

- Failover

- Load Balancing

Production

Pushing the POC out to the most specific level, perhaps a

4-node test of your target production setup:

- Database upgrade / migration

- Client application upgrades

- Failover benchmarking

- Maintenance

- Performance

Planning the test bed

Test resources are often at a premium, so you need to make

your list early; are you testing on VMs? Real separate boxes? Once you’ve

established your success criteria during the scoping, you can work backwards to

make sure you have the right equipment.

Define the complexity of your test bed based upon those

criteria. You can run functional tests when you have multiple CE instances on a

single physical node, even though you may not be confident as to the results of

your failover tests. You can use partitioned nodes to work around resource

availability.

A simple approach to a true multi-node cluster would be two

nodes with a twisted-pair interconnect. For more (required) complexity you can

go to a 4-node cluster with high-speed network interconnects and shared

storage. Be sure to check the current supported hardware lists.

Use cases

Your use cases will become your checklist; your table should

be orchestrated along these lines:

|

Test |

Purpose |

Success Criteria |

|

Database Stability in a clustered configuration |

||

|

Power Off test |

Validate database survival in case of an abnormal shutdown |

|

|

Unplug a network cable |

CE isolation tests |

|

|

CE performance under a workload |

||

|

Large report performance |

Load Balancing |

|

|

Backup and recovery |

||

|

Replication |

||

|

Operations and maintainability | ||

|

Performance monitoring |

||

Other test areas may include installation, high availability

under a variety of circumstances, workload management, maintenance, job

scheduling, and anything else that has driven your decision to evaluate CE.

Hardware requirements

The supported hardware list changes on an occasional basis,

so make sure you check the Sybase web site to see what’s currently available.

At this writing, supported platforms include:

- X64 w/ RHEL, SUSE

- Sun SPARC w/ Solaris

- IBM AIX, HP-UX

- All cluster nodes of a single platform

- 12.5 to 12.5.3

- 15.0 to 15.0.3

Upgrades are supported from ASE versions:

Shared SAN storage is a requirement. The SAN must be

certified for raw devices on SAN. The SAN fabric must be multi-pathed. All the

data devices must be visible on all participating nodes. The disk subsystem

should support SCSI-3 persistent reservation.

Multiple network interface cards (NICs) are also mandatory;

you need both public access (from the clients) and high-speed private access

(for intra-node communications).

Within a node, the same OS and architecture is required.

Best Practices

-

Take the time to validate the ecosystem on the cluster prior to

beginning software installation - Storage

- Use only raw devices, not file system devices

- All CE devices must be multi-path for visibility on all nodes

-

Save additional space for the Quorum and local system tempdb on

each instance -

Do not use io fencing on the quorum device; it cannot share LUN

with other CE devices (SCSI IO fencing is implemented at the LUN level) -

Ensure IO complex setup is not at the expense of latency and

throughput. If available, benchmark non-CE latency and try to maintain similar

profile with CE - Network

-

Use at least one private network interface, in addition to the

public interfaces. Private network usage is critical, you will want to

configure for maximum throughput and the least latency. Perform visibility

tests to ensure that the private network actually is private - Installation

- Use UAF agents on all nodes

-

Connect ‘sybcluster’ to all the UAF agents to test the network

connectivity -

Generate the XML configuration for installation for future

reference & reuse - Post-installation

- First, test for cluster stability

-

Make sure the cluster is stable under all conditions; any

instability is likely due to ecosystem issues - Test any abnormal network, disk, or node outages

- Startup nuances

-

The cluster startup writes out a new configuration file.

Sometimes, due to timing, an individual node may need to be started up twice - Keep an eye on the Errorlog

-

The Errorlog file is going to be more

verbose because the cluster events add a great deal of information - Validate client connectivity to all nodes

-

Connection migration and failover process is sensitive to network

names; consistent DNS name resolution is critical path - Connect to all nodes form each client machine as part of the test

-

Inconsistent naming may cause silent redirection, causing the

workload distribution to be less evenly distributed than you want - Database load segmentation

-

Load segmentation is key, and should be seamless with Workload

Management -

Use happy case segmentation. This keeps writes to a database on

single nodes, spreads out reads if there is instance saturation -

Plan out failover scenarios and the consequent load management

implications -

Pay special attention to legacy APIs, which may not communicate

to the cluster, and therefore need upgrading

Installation and configuration

Most ASE configuration parameters will behave as you expect;

individual servers will still process individual queries. In general, you will

configure at the cluster level, and those configuration options will propagate

to individual servers. You may, if you choose, configure individual instances,

if for example all hardware doesn’t match. Each server will also get its own

system tempdb, which will be used by the quorum device.

The quorum contains the cluster configuration, which is in

turn managed with the qrmutil utility.

There are other configuration parameters for CE, you’ll

likely leave them at the defaults, but do take the time to read the release

bulleting for the individual operating systems for any changes you might

consider making.

Workload management

Workload management is a new concept for a lot of DBAs,

because in a standard ASE environment all the workload is directed at a single

db server. Some examples of workload management include using standby replicated

server for read-only queries.

Active load management requires metrics, and you are going

to have to take some time to determine how to measure and manage this activity.

You should expect a significant performance improvement with an active load segmentation

strategy.

Summary

ASE CE is a significant new feature to the Sybase suite.

Managing the POC requires a great deal of forethought & planning. We’ve

given you a bit of a starting point.

Additional Resources

Overview of Sybase ASE In-Memory Database Feature

Sybase Adaptive Server Enterprise Cluster Edition

Sybase ASE CLUSTER EDITION PROOF OF CONCEPT STRATEGIES

A 20-year veteran of Sybase ASE database administration, design, performance, and scaling, Jeff Garbus has written over a dozen books, many dozens of magazine articles, and has spoken at dozens of user’s groups on the subject over the years. He is CEO of Soaring Eagle Consulting, and can be reached at Jeff Garbus.