Archiving

historical database data is an important, complex and in most cases

forgotten task of database projects. Historical data has to be extracted and deleted from the

database as a part of the archiving procedure. Extracted data is kept for

several years as archived data, while the database retains only the actual,

active set of data. If we do not archive, the data volume will increase, and so will

the project costs. Just buying a new disk is not a permanent solution for

handling expanding archive data. An Oracle DBA must understand the application

model before stepping into implementing an archive procedure. A simple archive

plan has the following basic steps:

- Prepare data to be archived

- Extract and archive data to be deleted

- Delete historical data from database

In

this article, we will examine the problem of deleting historical data and

present some practical tips on how to execute and check the deleting

performance.

-

Highwather

Mark and Delete Operation -

Delete

Operation Measurements -

Code

Sample for Measurement Test -

Final

Recommendations - Conclusion

Highwater Mark and Delete

Operation

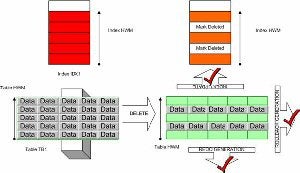

Highwater Mark (HWM)

For each

object, Oracle maintains a record of the highest relative block used to hold

data.

HWM

is the last data block formatted to receive data, not necessarily carrying

data. The table

highwater mark (HWM) is the number stored in the table segment header. Adding

new data to the table moves HWM to the next block, or to the last used block. Oracle

optimizer relies on the HWM during calculation for the full table scan, and on full table scans, Oracle optimizer will always scan all database

blocks

until the HWM pointer.

This highwater mark is maintained in multiples of five blocks. Oracle has provided a way to find

HWM for the table using the DBMS_SPACE package, which is not dependent on the

analyzed status of the table. Deleting data from the table leaves the HWM

unchanged. The next table insert will use the first free block on the free

block list, which is beyond the highwater mark causing the table to continue

growing.

Delete Operation

Delete command example:

SQL

> delete * from artist.tb1 where INSERT_DATE > TO_CHAR(’12-05.2001′);

SQL

> commit;

The

delete operation will scan the table until it reaches the HWM (Highwater Mark)

position and afterwards will remove records which match the desired where

condition. The deletion operation will not change the HWM point and the next

full table scan will scan again until the HWM, regardless of whether data exists

in the table or not. All the previous extent remains allocated for the table. When the matching column has an index on it, optimizer will most

likely scan only the index for the matching records.

All deleted entries from the index tree will be marked as deleted

but still retain allocated space in the index tree.

Oracle’s

internal mechanism will generate the rollback content to provide backup

information for deleted table data in case the operation is cancelled. On

commit, deletions are marked as permanent. The database

changes will be logged in the redo log file and transferred in the rollback

segments.

Delete

Operation Measurements

For

making performance measurements I choose Oracle database 9.0.1 Enterprise

Edition on the Sun Solaris operating system. Let’s assume in database schema ARTIST we have one big table ARTIST_TEST with

19856 records. From this table we are going to delete 6768 records using

following syntax:

delete from artist_test where SECOND_COLL=’JAVA CLASS’;

The table has only one index that is never

dropped or rebuilt. Before running every test we will make regular

compute-analysis of the table.

SQL code to rebuild table and prepare

system for each measurement:

SQL> create table artist_test (

2 no number, first_col number, second_coll varchar2(1000), rest varchar2(1000),

3 constraint artist_pk primary key (no)) tablespace artist

4 /

Table created.

SQL> insert into artist_test select rownum,OBJECT_ID,OBJECT_TYPE,rpad(‘H’,100) from all_objects;

19856 rows created.

SQL> commit;

Commit complete.

SQL> analyze table artist_test compute statistics;

Table analyzed.

SQL> alter system flush shared_pool;

System altered.

The Command ANALYZE TABLE will provide

fresh information for the optimizer and the command ALTER SYSTEM FLUSH

SHARED_POOL will erase all previously cached statements from the shared pool.

During a test, there is regular activity on the database that simulates the

real production system. To obtain as much precise information for own session

activity, a special view has been created named “V$ROGER” under sys account.

This view is just a link to the regular database dynamic performance view

“V$MYSTAT” provided by Oracle for collecting local session information.

SQL> create or replace view v$roger as select sn.name,ms.value

from v$mystat ms,v$statname sn where ms.statistic# = sn.statistic#;

View created.

SQL> create public synonym v$roger for v$roger

Synonym created.

SQL> grant select on v$roger to public;

Grant succeeded.

The

delete statement statistics are generated using special event 10046. Setting

this event will start tracing with level 8, generating in the trace file

standard SQL_TRACE information, bind values and wait events. Short description

for that event:

SQL> alter session set events ‘10046 trace name context forever, level 8’;

Session altered.10046 EVENT levels:

1 – Enable standard SQL_TRACE functionality (Default)

4 – As Level 1 PLUS trace bind values

8 – As Level 1 PLUS trace waits

This is especially useful for spotting latch wait etc.

but can also be used to spot full table scans and index scans.

12 – As Level 1 PLUS both trace bind values and waits

A

trace file will be processed with regular Oracle tool TKPROF using following

syntax:

# tkprof artist_ora_23641.trc sql_cost3.prf sort=exeela,fchela explain=artist/artist

Timing

information is generated using the database procedure GET_TIME from the DBMS_UTILITY

package provided by Oracle.

time_before:=DBMS_UTILITY.GET_TIME; time_after:=DBMS_UTILITY.GET_TIME; time_elapsed:=time_after-time_before;